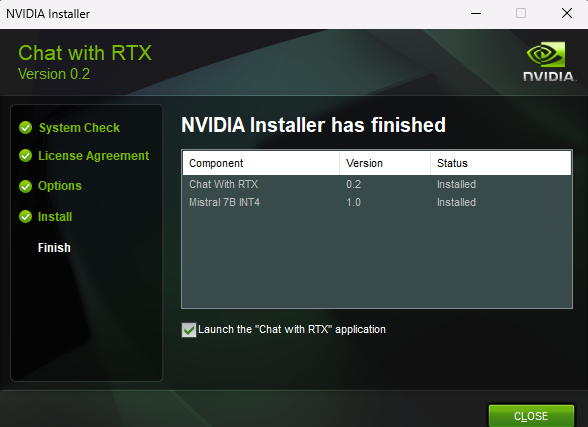

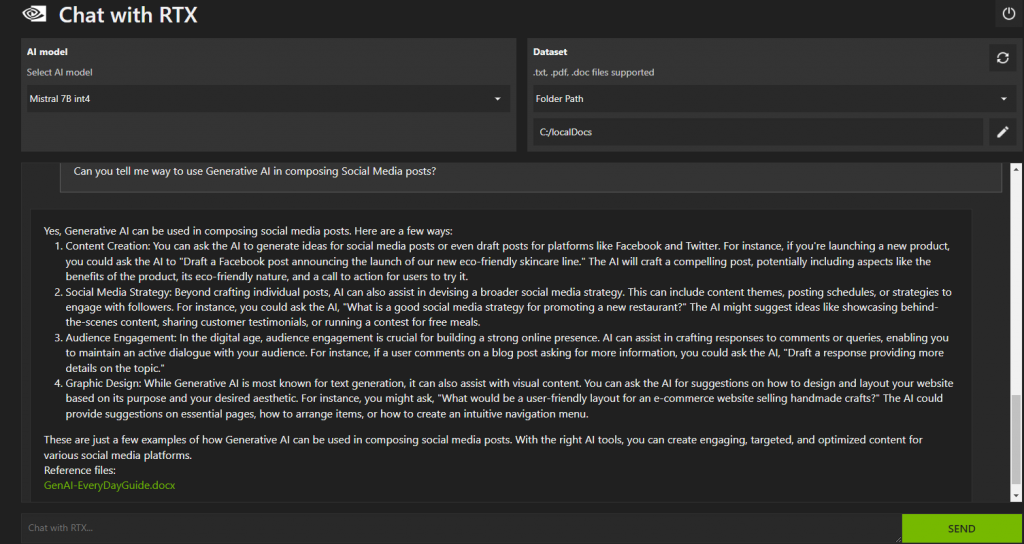

NVIDIA has released a new tool, “Chat with RTX,” that connects an AI chatbot to a user’s own content. The application lets users feed their documents, notes, and videos into a chatbot, using retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration. Because it runs locally on Windows RTX PCs and workstations, it can return fast, contextually relevant answers drawn from the user’s locally stored data without sending that data to the cloud.

“Chat with RTX” distinguishes itself by supporting a broad range of file formats, including text, PDF, DOC/DOCX, and XML. Users point the app at their files, and it adds them to its library. The app can also take YouTube playlist URLs and incorporate transcriptions of the videos, expanding the chatbot’s knowledge base. This lets users pull information and answers from content they have curated themselves.
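As a rough illustration of the “point the app at your files” step, the sketch below walks a folder and keeps only files whose extensions match the formats listed above. It is not Chat with RTX’s own code: the function name `collect_supported_files` and the exact extension set are assumptions made for illustration.

```python
from pathlib import Path

# Hypothetical extension set mirroring the formats named in the article
# (plain text, PDF, DOC/DOCX, XML); not taken from Chat with RTX itself.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def collect_supported_files(folder: str) -> list[Path]:
    """Recursively list files under `folder` whose extension is supported."""
    root = Path(folder)
    return sorted(
        path
        for path in root.rglob("*")
        # Compare extensions case-insensitively so "Report.PDF" is included.
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )


if __name__ == "__main__":
    for path in collect_supported_files("."):
        print(path)
```

A real ingestion pipeline would then parse each file and split it into chunks for indexing; this sketch stops at file discovery.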

For developers, the tool is a tech demo built on the TensorRT-LLM RAG developer reference project, which is available on GitHub. That project offers a starting point for building and deploying RAG-based applications accelerated by RTX GPUs, aimed at developers who need high-performance AI on local machines.

One of the appealing features of Chat with RTX is its usefulness for everyday tasks. Instead of manually combing through files or notes, users can type a query and get the specific information they need. For instance, finding a restaurant someone recommended or recalling a detail from a document becomes a matter of asking the right question, with the chatbot pulling the answer from the user’s content.
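The “pulling the answer from the user’s content” step is the retrieval half of RAG: find the stored passages most relevant to the question, then hand them to the language model as context. The minimal sketch below assumes a simple word-count cosine similarity in place of the neural embeddings and vector index a real system such as Chat with RTX would use, and it stops before the LLM call. The function names and sample notes are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lower-case word counts; a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = tokenize(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Prepend the retrieved context to the question before it would go to an LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical personal notes standing in for a user's indexed files.
notes = [
    "Sarah recommended the restaurant Luigi's downtown.",
    "The quarterly report is due on Friday.",
    "Buy milk and eggs.",
]
print(build_prompt("Which restaurant did Sarah recommend?", notes, k=1))
```

Swapping `tokenize` and `cosine` for an embedding model and a vector store changes the quality of retrieval, not the shape of the pipeline.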

Chat with RTX is also free to download, making it an accessible option for anyone interested in a personalized AI chatbot. Running it requires an NVIDIA GeForce RTX 30 Series GPU or newer with at least 8GB of video random access memory (VRAM). The system must also run Windows 11, have at least 16GB of RAM, and use the latest NVIDIA GPU drivers. Chat with RTX can be downloaded from NVIDIA’s website.
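The stated hardware and OS thresholds can be written down as a simple check. The sketch below encodes only the numbers given in this article; the `SystemSpec` fields, and representing the GPU generation as an integer such as 30 for the RTX 30 Series, are assumptions for illustration. It does not query real hardware, does not check driver versions, and does not cover workstation RTX cards.

```python
from dataclasses import dataclass


@dataclass
class SystemSpec:
    # Hypothetical fields; values would come from the user, not from the OS.
    rtx_series: int        # e.g. 30 for GeForce RTX 30 Series, 40 for RTX 40 Series
    vram_gb: float         # GPU memory in GB
    ram_gb: float          # system memory in GB
    windows_version: int   # e.g. 10 or 11


def unmet_requirements(spec: SystemSpec) -> list[str]:
    """Return reasons the spec falls short of the article's stated requirements."""
    problems = []
    if spec.rtx_series < 30:
        problems.append("needs a GeForce RTX 30 Series GPU or newer")
    if spec.vram_gb < 8:
        problems.append("needs at least 8GB of VRAM")
    if spec.ram_gb < 16:
        problems.append("needs at least 16GB of system RAM")
    if spec.windows_version < 11:
        problems.append("needs Windows 11")
    return problems


print(unmet_requirements(SystemSpec(rtx_series=30, vram_gb=8, ram_gb=16, windows_version=11)))
print(unmet_requirements(SystemSpec(rtx_series=20, vram_gb=6, ram_gb=16, windows_version=10)))
```

An empty list means the machine meets every threshold the article lists.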

While “Chat with RTX” may not revolutionize the AI landscape, it is a valuable, practical tool for personal productivity and information retrieval. Because it runs locally on users’ machines, it offers a way to use AI without the privacy concerns of cloud-based processing. For those with the required hardware, it promises to be a useful addition to their digital toolkit, especially since it is free and supports a wide range of content formats.