This week, NVIDIA introduced “Chat with RTX,” a new tool that has caught the attention of AI enthusiasts and productivity seekers alike. As someone always looking for ways to streamline my digital workflow, I couldn’t resist trying the application firsthand. Chat with RTX pairs an AI chatbot with your own content. It uses retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM, and RTX acceleration to answer questions from your locally stored files. Because everything runs on your own PC, you get fast answers and your data never has to leave the machine.

Before getting into the specifics of my experience, it’s worth noting the system requirements for running Chat with RTX:

- an NVIDIA GeForce RTX 30 or 40 Series GPU with at least 8GB of VRAM
- at least 16GB of RAM
- Windows 11
- the latest NVIDIA driver

My desktop, with an 11th-generation Intel Core i9, 32GB of RAM, and a GeForce RTX 3070, meets all of them.

Setup was straightforward, though the download is a hefty 35GB because it bundles two open-weights LLMs, Mistral 7B and Llama 2. One immediate hiccup was that Llama 2 did not appear as an option in the app, even though it was part of the download. This was a minor disappointment, since I had hoped to compare the two models.

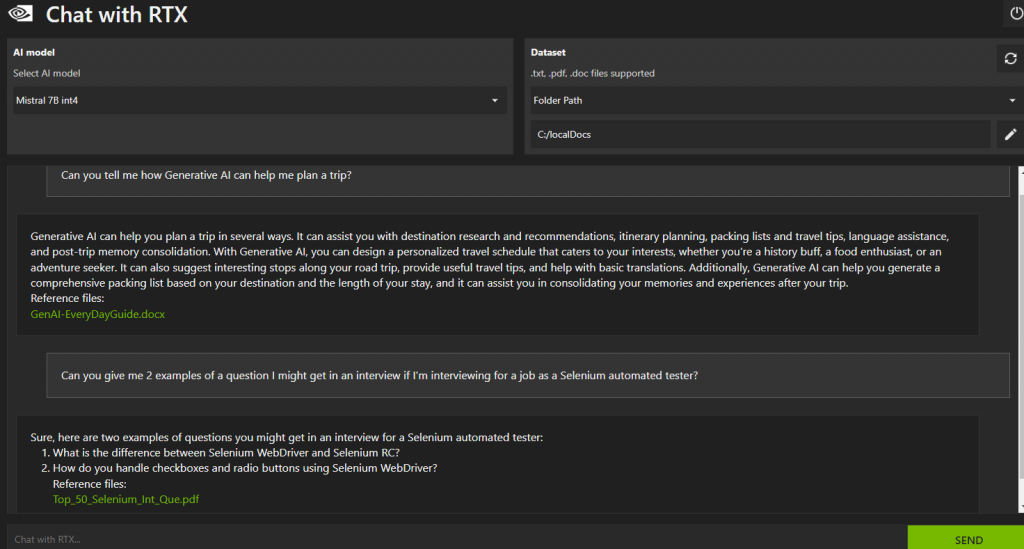

I tested the app by pointing it at a folder containing seven PDFs and three Word documents. Processing them took roughly five minutes, a reasonable wait for the promise of fast, accurate information retrieval. Chat with RTX delivered on that promise. For every question I asked about these documents, it gave an accurate answer. It also cited the specific document the information came from and included a link to open that document.

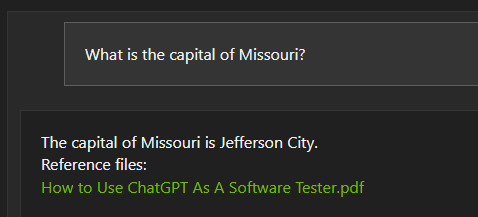

I also asked a few questions unrelated to my documents, and the chatbot still gave mostly accurate answers. However, I ran into a bug: it attributed some of these answers to my local files, even though the information came from the model’s built-in knowledge. NVIDIA should address this in a future update.

The potential of Chat with RTX is clear. It changes how you interact with your stored data. Instead of manually sifting through documents, you can ask a simple question and get the details you need almost instantly, along with the file they came from.

There is room for improvement, notably the missing Llama 2 option and the misattribution bug, but the tool’s core functionality is impressive. For individuals and professionals who want to make better use of their local data, Chat with RTX is a novel solution. It also reflects NVIDIA’s commitment to putting AI to practical, user-friendly use. Whether you’re researching, organizing notes, or just trying to recall a specific detail, Chat with RTX offers a glimpse of where personal content management is heading.